Making My Own Transformer Language Model

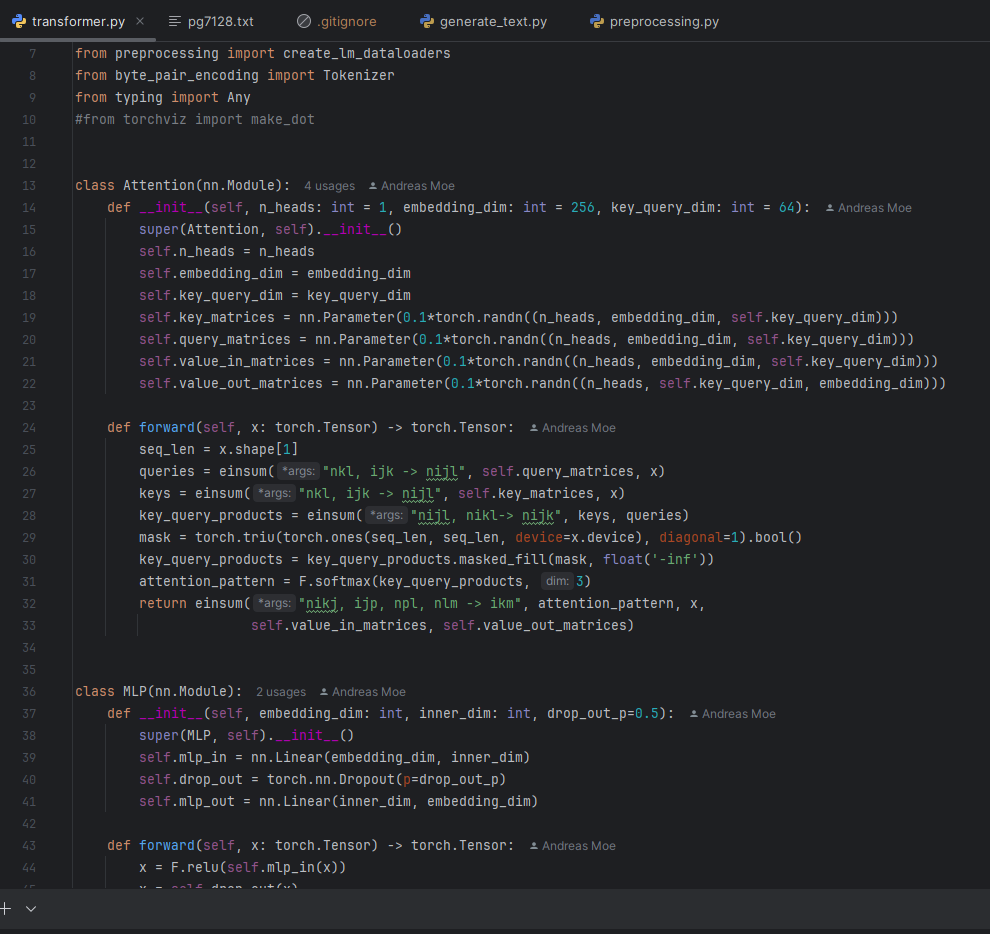

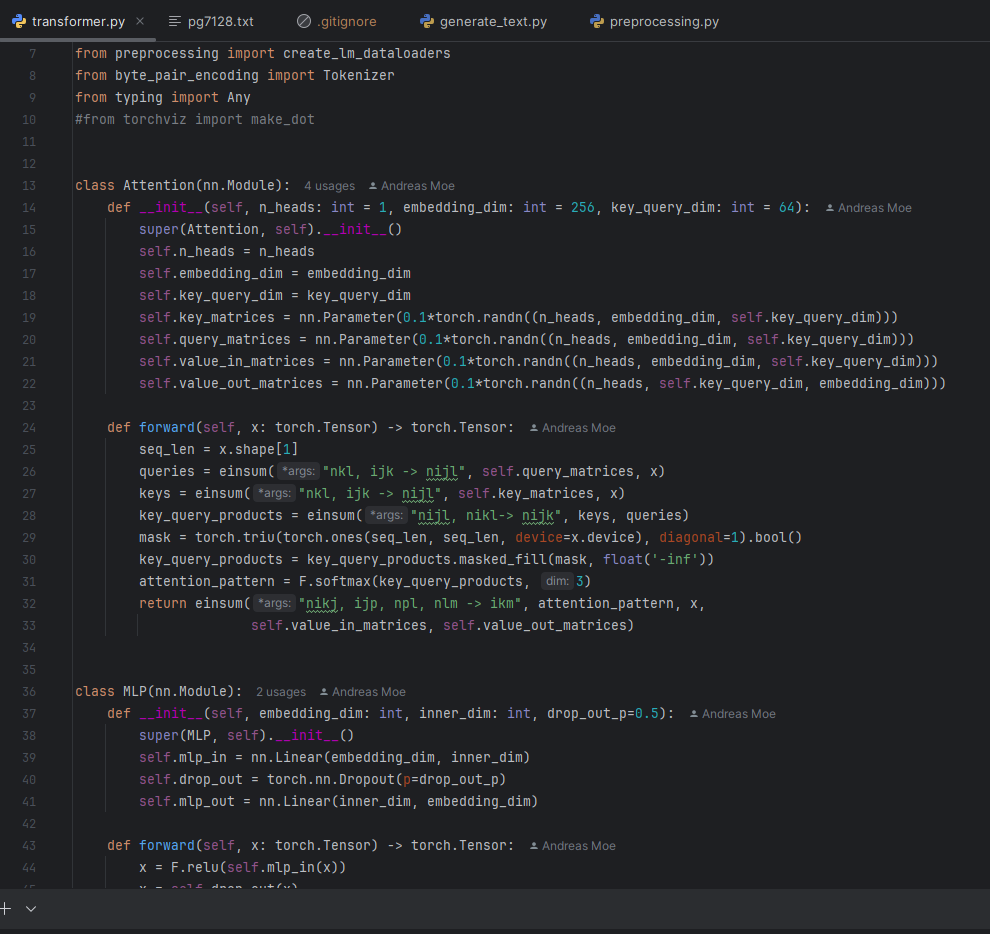

I made my own transformer language model in late 2024, using PyTorch. I compiled my own small dataset of children's books from Project Gutenberg.

I also wrote my own byte-pair encoder and tokeniser, in addition to the attention and MLP layers.
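To give a rough idea of what a byte-pair encoder does, here is a minimal sketch in plain Python. It is not the code from this project: the function names (`train_bpe`, `encode`, `decode`, `merge_pair`) and the choice to start from raw UTF-8 bytes are assumptions made for illustration. The idea is to repeatedly find the most frequent adjacent pair of tokens and replace it with a new token id.

```python
from collections import Counter


def merge_pair(seq, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `seq` with `new_id`."""
    out = []
    i = 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges, starting from the 256 raw byte values."""
    seq = list(text.encode("utf-8"))
    merges = {}  # (left_id, right_id) -> new_id, kept in the order learned
    for _ in range(num_merges):
        counts = Counter(zip(seq, seq[1:]))
        if not counts:
            break
        pair, freq = counts.most_common(1)[0]
        if freq < 2:
            break  # no pair repeats, so further merges would not compress anything
        new_id = 256 + len(merges)
        merges[pair] = new_id
        seq = merge_pair(seq, pair, new_id)
    return merges


def encode(text, merges):
    """Tokenise `text` by replaying the learned merges in the order they were learned."""
    seq = list(text.encode("utf-8"))
    for pair, new_id in merges.items():
        seq = merge_pair(seq, pair, new_id)
    return seq


def decode(ids, merges):
    """Map token ids back to text by expanding each id to its byte string."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges.items():
        vocab[new_id] = vocab[left] + vocab[right]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


if __name__ == "__main__":
    merges = train_bpe("the cat sat on the mat, the cat sat", num_merges=10)
    ids = encode("the cat", merges)
    print(ids, decode(ids, merges))
```

The resulting token ids are what would be fed to the model's embedding layer. A real tokeniser would usually also split text into words first and handle special tokens, which this sketch leaves out.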

The resulting model is pretty terrible. Here is an example paragraph:

Prompt: "He "

He cut him into O

between, recly off he asted out them to castly,-son

charts. She grew in the lakeyeous old other, who suppose. And he looked as well old wel and to

to servers heart, too,

k.On of a simply at the poor birth, stood life room

turn food and beat to

King and followed, the mountains over on, on to --the to-water away. The young Thunable to

both farmer ance."What his pleasanty had even spatful

The code can be found here.